Musician Germán Canyelles uses Low-tech Magazine’s bike generator to power his electric guitar. The guitar amplifier and pedals are plugged into an inverter connected to the 12V circuit of the bike generator. No batteries are used. Recorded at Akasha Hub, Barcelona.

Human Powered Electric Guitar

No Tech Reader #38

- I Made My Blog Solar-Powered, Then Things Escalated [Louwrentius] What happens when you try to run a solar-powered website from your balcony in the Netherlands? “Only with a 740 Watt rated solar panel setup was I able to power my Raspberry Pi through the winter.”

- The Rising Chorus of Renewable Energy Skeptics [The Tyee] “The current prescription for stopping climate change with a mining boom to support an industrial production of renewable technologies is a dangerous course.”

- Half-farming, half-anything: Japan’s rural lifestyle revolution [Japan Times] “An increasing number of people from all age brackets are leaving behind their lives in Japan’s cramped megacities in favor of growing their own food sources, combined with a vocation that reflects their own unique interests and talents.” [Via Wrath of Gnon]

- The age of average [Alex Murrell] “Whether you’re in film or fashion, media or marketing, architecture, automotive or advertising, it doesn’t matter. Our visual culture is flatlining and the only cure is creativity.” [Via Ran Prieur]

- Nick Cave on Christ and the Devil [Unherd] “The idea that you can offend people, or that your songs can be dangerous enough for people to be scared of them, is exciting for me.”

- In an isolated world, humans need to dance together more than ever – but we’re running out of places to do it [The Guardian]

- A Humanism of the Abyss [The New Atlantis] “Illich and Sacks had in common a desire to reform the medical system — a desire that today still remains unfulfilled.”

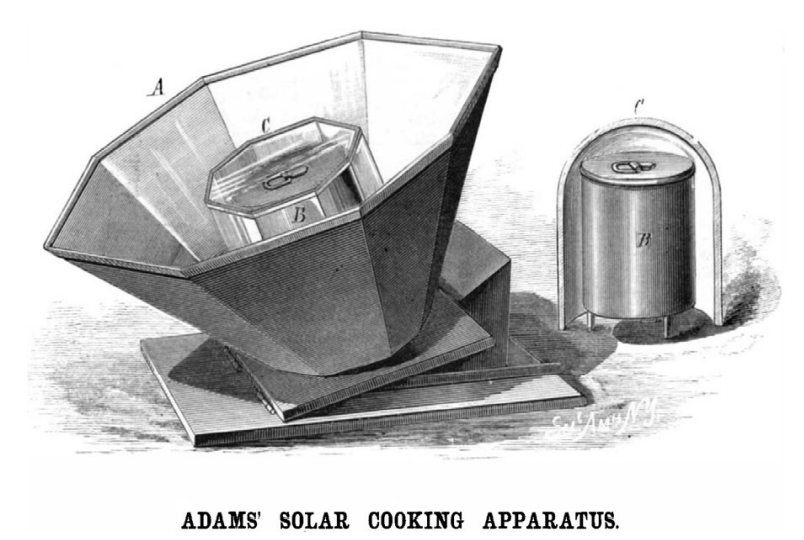

The poor woman’s energy: Low-modernist solar technologies and international development

“Solar energy often appears a technology without a history, perpetually new and oriented towards the future. This sense of perennial novelty has gone unchallenged by historians, who have generally neglected renewable energy outside the rich world and all but ignored solar energy everywhere. Left to industry professionals, solar history is typically narrated as a triumphalist tale of technical innovation centered in the global North. Such accounts often conflate solar energy with solar photovoltaics (PV) for direct electricity generation… It is tempting to draw a straight line from this innovation to the huge solar PV installations of the twenty-first century; India’s largest, Rajasthan’s US$1.4 billion Bhadla Solar Park, sprawls across an area the size of Manhattan.”

“Rejecting the eschatology of climate change, such huge mega-projects have reignited the high-modernist idea of progress. They fuse an optimism about the possibilities of science, technology, and human innovation to deliver sustained improvements in economic production and the satisfaction of human needs. In this bright new age, endless rows of solar panels promise to square the circle of economic growth and environmental preservation by providing virtually infinite amounts of clean power for all—and empowerment for women to boot. These utopian ideas, the environmental humanists Imre Szeman and Darin Barney suggest, are coalescing into ‘one of the sharpest and most powerful of ideologies’ today…” [Read more…]

No Tech Reader #37

- These scientists lugged logs on their heads to resolve Chaco Canyon mystery. [Ars Technica] “Tumplines allow one to carry heavier weights over larger distances without getting fatigued.” Thanks to Matthew McNatt.

- Barbed Wire Telephone Lines Brought Isolated Homesteaders Together. [Atlas Obscura] “In some cases, as many as 20 telephones were wired together—all of which would ring simultaneously with each call, regardless of who was making it and who they were trying to reach. Agreed-upon codes—three short rings for you, two long rings for me—helped people know if the call was for them.”

- The vertical farming bubble is finally popping. [Fast Company] “In a typical cold climate, you would need about five acres of solar panels to grow one acre of lettuce”.

- Seaweed as a resilient food solution after a nuclear war. [ResearchGate] “We find seaweed can be grown in tropical oceans, even after nuclear war. The simulated growth is high enough to allow a scale up to an equivalent of 70 % of the global human caloric demand (spread among food, animal feed, and biofuels) in around 7 to 16 months, while only using a small fraction of the global ocean area. The results also show that the growth of seaweed increases with the severity of the nuclear war, as more nutrients become available due to increased vertical mixing. This means that seaweed has the potential to be a viable resilient food source for abrupt sunlight reduction scenarios.”

- Traditional Fishing Gears and Methods of the Bodo Tribes of Kokrajhar, Assam. [Fishery Technology] “The popularity and usage of some of the gears like Sahera, Baga, Borom Je and Dura Je were found declining, which may be attributed to increasing popularity of destructive fishing techniques like electric fishing, blast fishing and poisoning.”

- Low-tech approaches for sustainability: key principles from the literature and practice. [Sustainability: Science, Practice and Policy] “This article develops a seven-principle framework to categorize low-tech concepts based on an abductive approach which included a literature review and interviews with low-tech actors.”

- Ministry of Truth: The secretive government units spying on your speech. [Big Brother Watch] “The internet contains masses of incorrect information – but this is a defining feature of an open forum, not a flaw.”

- We’ve lost the plot. [The Atlantic] “Our constant need for entertainment has blurred the line between fiction and reality—on television, in American politics, and in our everyday lives.”

Some low-tech computing links:

Artificial Intelligence and Climate Change

Quoted from: Couillet, Romain, Denis Trystram, and Thierry Ménissier. “The submerged part of the AI-ceberg.” IEEE Signal Processing Magazine, September 2022.

The energy consumption of a single training run of the latest (by 2020) deep neural networks dedicated to natural language processing exceeds 1,000 megawatt-hours (more than a month of computation on today’s most powerful clusters). This corresponds to an electricity bill of more than 100,000 euros (figures in the millions of euros are sometimes found) and 500 tons of CO2 emissions – that is, the carbon footprint equivalent to 500 transatlantic round trips from Paris to New York. In comparison, the human brain consumes in a month about 12 kWh, i.e., a hundred thousand times less, for tasks much more complex than natural language translation. [Read more…]
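The figures in this quote can be checked with a few lines of arithmetic. The sketch below is an illustration added here, not part of the cited paper; the variable names are our own, and it simply takes the quoted lower bounds (1,000 MWh, 100,000 euros, 500 tonnes of CO2, 12 kWh per brain-month) at face value. It shows that the energy ratio comes to roughly 83,000, which the authors round to “a hundred thousand”, and that the cost and emission figures imply about 0.10 euros per kWh and about one tonne of CO2 per Paris-New York round trip.

```python
# Figures as quoted above (Couillet, Trystram and Ménissier, 2022).
# All values are the stated lower bounds; variable names are illustrative.
TRAINING_ENERGY_MWH = 1_000      # one training run of a large language model
BRAIN_ENERGY_KWH_PER_MONTH = 12  # human brain over one month
TRAINING_COST_EUR = 100_000      # electricity bill for the run
TRAINING_CO2_TONNES = 500        # emissions for the run
ROUND_TRIPS = 500                # Paris-New York round trips with the same footprint

# Convert MWh to kWh so both energies share a unit.
training_kwh = TRAINING_ENERGY_MWH * 1_000

# How many brain-months of energy one training run represents.
ratio = training_kwh / BRAIN_ENERGY_KWH_PER_MONTH

# Electricity price implied by the quoted bill.
price_per_kwh = TRAINING_COST_EUR / training_kwh

# CO2 per round trip implied by the flight comparison.
co2_per_trip = TRAINING_CO2_TONNES / ROUND_TRIPS

print(f"Training run vs. brain-month: {ratio:,.0f}x")
print(f"Implied electricity price: {price_per_kwh:.2f} EUR/kWh")
print(f"Implied CO2 per round trip: {co2_per_trip:.1f} t")
```

Running it prints a ratio of 83,333, a price of 0.10 EUR/kWh and 1.0 t of CO2 per trip, so the quoted numbers are internally consistent to within an order of magnitude.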

Hand-Cranked Canal Bridge in London

This London pedestrian bridge is entirely manual, with a hand crank to open it for boat traffic. In the video, the architects also discuss how the haptic feedback provided by hand cranking allows issues to be identified and prevents damage. Thanks to Mathew Lippincott.